Assured and optimized resilience is best

One of several excellent heads-ups in the latest issue of RISKS concerns an IEEE report on Facebook's live testing of their data center resilience arrangements.

Facebook's SWAT team, business continuity pros, tech crew and management all deserve congratulating on not just wanting to be resilient, but making it so, proving that it works, and systematically improving it so that it works well.

However, I am dismayed that such an approach is still considered high-risk and extraordinary enough to merit both an eye-catching piece in the IEEE journal and a mention in RISKS. Almost all organizations (ours included*) should be sufficiently resilient to cope with events, incidents and disasters - the whole spectrum, not just the easy stuff. If nobody is willing to conduct failover and recovery testing in prime time, they are admitting that they are not convinced the arrangements will work properly - in other words, they lack assurance and consequently face high risks.

About a decade ago, I remember leading management in a mainstream bank through the same journey. We had an under-resourced business continuity function, plus some disaster recovery arrangements, and some of our IT and comms systems were allegedly resilient, but every time I said "OK so let's prove it: let's run a test" I was firmly rebuffed by a nervous management. It took several months, consistent pressure, heavyweight support from clued-up executive managers and a huge amount of work to get past a number of aborted exercises to the point that we were able to conduct a specific disaster simulation under strictly controlled conditions, in the dead of night over a bank holiday weekend. The simulation threw up issues, as expected, but on the whole it was a success. The issues were resolved, the processes and systems improved, and assurance increased.

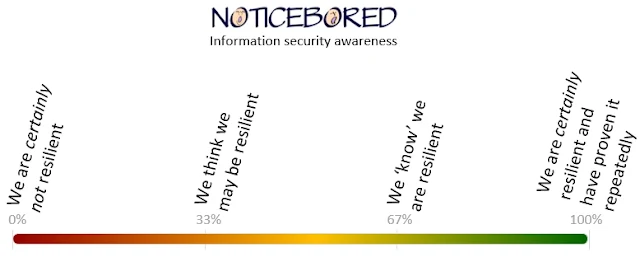

At that point, management was fairly confident that the bank would survive ... a specific incident in the dead of night over a bank holiday weekend, provided the incident happened in a certain way and the team was around to pick up the pieces and plaster over the cracks. I would rate the confidence level at about 30%, some way short of the 50% "just about good enough" point, and well shy of the 100% "we KNOW it works, and we've proven it repeatedly under a broad range of scenarios with no issues" ultimate goal ...

My strategy presentations to management envisaged us being in the position that someone (such as the CEO, an auditor or a careless janitor) could wander into a computer suite on an average Wednesday morning and casually 'hit the big red button', COMPLETELY CONFIDENT that the bank would not instantly drop offline or turn up in the news headlines the next day. Building that level of confidence meant two things: (1) systematically reducing the risks to the point that the residual risks were tolerable to the business; and (2) increasing assurance that the risks were being managed effectively. The strategy laid out a structured series of activities leading up to that point - stages or steps on the way towards the 100% utopia.

The sting in the tail was that the bank operated in a known high-risk earthquake zone, so a substantial physical disaster that could devastate the very cities where the main IT facilities were located was a realistic and entirely credible scenario, not the demented ramblings of a paranoid CISO. Indeed, not long after I left the bank, a small earthquake occurred, a brand new IT building was damaged and parts of the business were disrupted, causing significant additional costs well in excess of the investment I had proposed to make the new building more earthquake-proof through the use of base isolators. Management chose to accept the risk rather than invest in resilience, and are accountable for that decision.

* Ours may only be a tiny business but we have business-critical activities, systems, people and so forth, and we face events, incidents and disasters just like any other organization. Today we are testing and proving by using our standby power capability: it's not fancy - essentially just a portable generator, some extension cables and two UPSs supporting the essential office technology (PCs, network links, phones, coffee machine ...), plus a secondary Internet connection - but the very fact that I am composing and publishing this piece proves that it works since the power company has taken us offline to replace a power pole nearby. This is very much a live exercise in business continuity through resilience, concerning a specific category of incident. And yes there are risks (e.g. the brand new UPSs might fail), but the alternative of not testing and proving the resilience arrangements is riskier. That said, we still need to review our earthquake kit, check our health insurance, test our cybersecurity arrangements and so forth, and surprise surprise we have a strategy laying out a structured series of activities leading up to the 100% resilience goal. We eat our own dogfood.

UPDATE: today (15th Sept) we had an 'unplanned' power cut for several hours, quite possibly a continuation or consequence of the planned engineering work that caused the outage yesterday. Perhaps the work hadn't been completed on time so the engineers installed a temporary fix to get us going overnight and returned today to complete the work ... or maybe whatever they 'fixed' failed unexpectedly ... or maybe the engineers disturbed something in the course of the work ... or maybe this was simply a coincidence. Whatever. Today the UPSs and generator worked flawlessly again, along with our backup Internet and phone services. Our business continued, with relatively little stress thanks to yesterday's activities.